Until recently I was running my blog on Linode using Docker Swarm. This week I migrated over to Kubernetes on DigitalOcean.

After doing a comparison between running Kubernetes and Swarm a few years back, I decided in favor of the latter. Swarm is incredibly lightweight and, at the time, it was different enough from what I was doing day to day to be interesting. Further, I’m cheap. I could effectively run my blog, its database, and a few other apps on a $5/mo Linode instance. There weren’t good options, besides GKE, for managed Kubernetes at the time, either. I didn’t want to manage a Kube control plane.

Fast forward a few years: we’re in a very different situation. When DigitalOcean announced their Kubernetes offering, I took it out for a spin. Even though it’s more expensive, I decided there’s more learning value in running my blog on Kube than there is in running it on Docker Swarm. Let’s be honest, I’m not writing this blog because I expect to get famous. Running this site is all about learning value for me. This is part one of what I’ve learned so far.

Pricing Your Expectations

I don’t use Amazon Web Services or Google Cloud for my personal projects. The utility pricing those providers have is great for micro-usage or companies that have the budget to write big checks. I, however, am always going to strongly favor predictability. Even though I am probably spending more on DigitalOcean than I would at Amazon or Google, it would take a lot to cause that dollar amount to increase.

I never expect to end up on the front page of Hacker News, but it’s nice to know that if I do I don’t have to be too concerned with what the impact on my wallet is going to be.

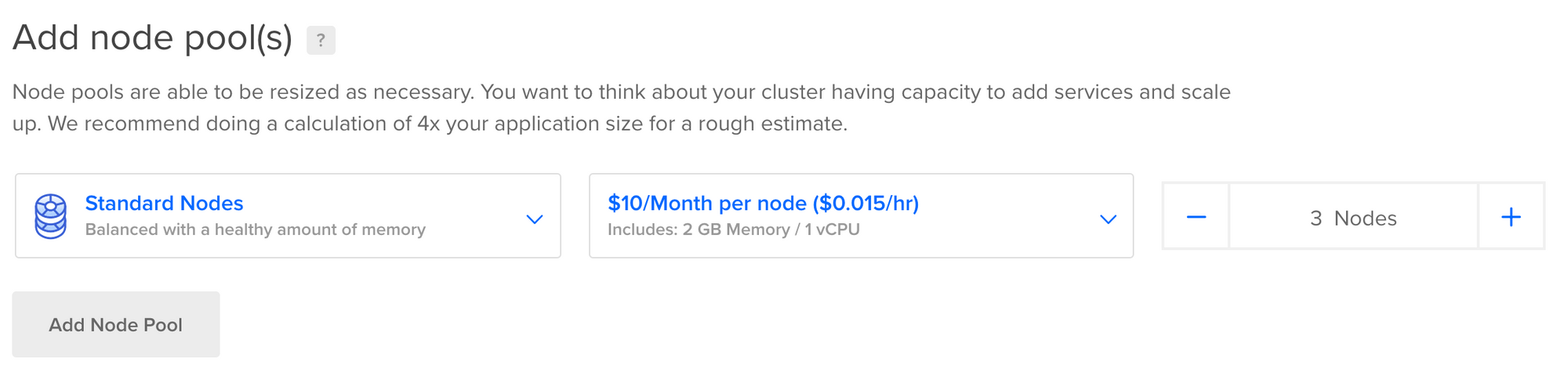

When configuring a Kubernetes cluster on DigitalOcean you configure what are called Node pools.

For my personal cluster I opted for two of the Flexible Nodes. My selection was $15/month for 2 vCPUs and 2 GB of memory for each node.

In addition to this, you pay a per-GB cost for each Volume you create on DigitalOcean. When you deploy a Kubernetes cluster on DigitalOcean, this is all abstracted behind the Kubernetes concept of PersistentVolumeClaims.

When you create a new PersistentVolumeClaim in Kubernetes with the storage class do-block-storage, DigitalOcean creates a Volume on the backend. These volumes ensure that data outlives any individual node if that node gets destroyed. At $0.10 per GB reserved, my usage adds about $1 per month to my bill. (Note: You pay for GB reserved, not GB used. So if you reserve 10 GB, that’s what you pay for.)
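To make the claim-to-Volume relationship concrete, here is a minimal sketch of what such a PersistentVolumeClaim could look like, built as a Python dict and printed as JSON (which `kubectl apply -f` accepts alongside YAML). The claim name `blog-data`, the 10 GB size, and the `ReadWriteOnce` access mode are illustrative assumptions, not my actual manifest. Only the `do-block-storage` class and the $0.10 per GB price come from the text above.

```python
import json


def build_pvc(name: str, size_gb: int, storage_class: str = "do-block-storage") -> dict:
    """Return a PersistentVolumeClaim manifest as a plain dict."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},  # hypothetical claim name
        "spec": {
            # Assumption: a block volume attached to one node at a time.
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": storage_class,
            # Kubernetes sizes are usually written in Gi; treated here as
            # roughly equivalent to the GB figure used for billing.
            "resources": {"requests": {"storage": f"{size_gb}Gi"}},
        },
    }


def monthly_volume_cost(size_gb: int, price_per_gb: float = 0.10) -> float:
    """Cost is based on reserved size, not on how much data is stored."""
    return round(size_gb * price_per_gb, 2)


if __name__ == "__main__":
    pvc = build_pvc("blog-data", 10)
    print(json.dumps(pvc, indent=2))
    print(f"Estimated cost: ${monthly_volume_cost(10):.2f}/month")
```

Saving the JSON output to a file and applying it with `kubectl apply -f` should be enough for the cluster to request a matching Volume, assuming the storage class exists under that name in your cluster.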

I also have a LoadBalancer service declared in Kubernetes for Traefik, the service responsible for routing incoming requests to the right backend running in the cluster. On DigitalOcean Kube, this creates a DigitalOcean Load Balancer, which costs $10/month. You could save this cost by using a Floating IP and host ports in Kubernetes, but Floating IPs don’t automatically move when a node goes down.
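For reference, a Service of type `LoadBalancer` is what triggers the managed Load Balancer. The sketch below builds one as a Python dict and prints it as JSON. The service name `traefik`, the `app: traefik` selector label, and ports 80 and 443 are assumptions for illustration. Your Traefik deployment may use different labels and ports.

```python
import json


def build_loadbalancer_service(name: str, selector: dict, ports: dict) -> dict:
    """Return a Service manifest of type LoadBalancer as a plain dict.

    `ports` maps a port name to the port number, used here for both the
    service port and the target port on the pods.
    """
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "LoadBalancer",  # asks the cloud provider for a load balancer
            "selector": selector,    # must match the labels on the Traefik pods
            "ports": [
                {"name": port_name, "port": port, "targetPort": port, "protocol": "TCP"}
                for port_name, port in ports.items()
            ],
        },
    }


if __name__ == "__main__":
    service = build_loadbalancer_service(
        name="traefik",                 # hypothetical service name
        selector={"app": "traefik"},    # hypothetical pod label
        ports={"http": 80, "https": 443},
    )
    print(json.dumps(service, indent=2))
```

The Floating IP alternative would drop `type: LoadBalancer` in favor of host ports on the pods, which is where the failover problem mentioned above comes in.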

Finally, I’ve subscribed to DigitalOcean Spaces for $5/month. I’m using Spaces to host image assets for the blog. Previously, image assets were loaded from the blog’s server directly. Unfortunately, there was a performance hit to doing this. Images are many times larger than the text that you transfer from my server. Using Spaces I can offload that work to DigitalOcean and, bonus, I can utilize their Content Delivery Network (CDN) to get the images to load really quickly no matter how far away you are from my actual servers.

Totaling everything up:

- Compute Nodes: $30/month
- Load Balancer: $10/month
- Volumes: $1/month
- Spaces: $5/month

Total cost: $46/month.
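If you want to plug in your own numbers, the arithmetic is simple enough to sketch in a few lines of Python. The prices are the ones quoted in this post. The variable names are just illustrative.

```python
NODE_COUNT = 2          # two nodes in the pool
NODE_PRICE = 15         # dollars per node per month

# Monthly line items in dollars, taken from the breakdown above.
line_items = {
    "Compute Nodes": NODE_COUNT * NODE_PRICE,
    "Load Balancer": 10,
    "Volumes": 1,
    "Spaces": 5,
}

total = sum(line_items.values())

for name, cost in line_items.items():
    print(f"{name}: ${cost}/month")
print(f"Total cost: ${total}/month")  # Total cost: $46/month
```

Because every item is a flat monthly fee, the total only changes when I add nodes, reserve more volume space, or add another load balancer.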

That’s a pretty sharp increase from the $5/month that I was paying for my Swarm node. But this is all about the learning experience for me.

I’ve been learning a lot more from running on Kubernetes than I learned from running on Docker Swarm.